Bye Bye ESXi, Hello XCP-NG

Sometimes the best things in life are free. I've been running ESXi for over a year and was getting that itch, the one to tinker and change things around. Earlier in the year I added a second set of hypervisors to match my original two: dual-node Supermicro servers with Xeon E5-2640 v2s and 64GB of RAM each. I did manage to install ESXi on my new nodes, and I even moved my networking to dSwitches for the entire cluster, which made management easier in the long run. But I was starting to feel as though ESXi required a bit too much babying for a homelab.

Work also spun down a pair of old servers from a VMware cluster, and I was able to toss the latest vSphere version on them to keep up with it there. So I no longer needed it at home the way I did previously.

Decisions, decisions

The homelab crowd gives a lot of love to Proxmox. I've tried it in the past and never really liked the interface. It's a great platform with a lot of features that make it an impressive fit for a homelab, but I just cannot get over some mild gripes I've had with it.

Tom Lawrence gives a lot of love to XCP-NG and I've used Citrix previously at a different job. Plus, sometimes it's more fun to buck the trend.

I began by setting XCP-NG up on my 2 new hosts to make sure everything installed fine. The same company that maintains XCP-NG created Xen Orchestra, a vCenter-like tool that allows hypervisor management from a web interface. They also have XCP-NG Center if you're interested in a desktop client. Xen Orchestra has a free tier with some limitations, and paid options are available for production use.

Thankfully, Jarli01 on GitHub made an awesome script to build an (almost) fully functioning Xen Orchestra VM. I made a new VM with Ubuntu 20.04 and fired that script up. If I remember to, I should probably make a quick how-to on that in case someone is interested.

With hypervisors installed and management taken care of, I had to create my networks. I kept this pretty simple: two pairs of bonded NICs. One pair for management on the 1GbE RJ45 links, and one pair for everything else on the dual 10Gb SFP+ links. On top of the bonds I added VLANs for my storage, DMZ, and production networks.
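For anyone who prefers the CLI over Xen Orchestra, the layout above can be sketched as a small Python script that only prints the `xe` commands a host would need. This is a rough illustration, not what I actually ran: the NIC names, VLAN IDs, and the `<...-uuid>` placeholders are all made up, and placing every VLAN on the 10Gb bond is an assumption. You would substitute the real UUIDs from `xe pif-list` and `xe network-list`.

```python
# Hypothetical layout: NIC names and VLAN IDs are illustrative only,
# and the <...-uuid> strings are placeholders, not real values.
BONDS = {
    "bond-mgmt": {"nics": ["eth0", "eth1"], "vlans": {}},
    "bond-10g": {
        "nics": ["eth2", "eth3"],
        "vlans": {"storage": 10, "dmz": 20, "production": 30},
    },
}


def xe_commands(bonds):
    """Return the xe commands that would create each bond and its VLANs."""
    cmds = []
    for bond, cfg in bonds.items():
        # A bond lives on its own network object.
        cmds.append(f"xe network-create name-label={bond}")
        pifs = ",".join(f"<{nic}-pif-uuid>" for nic in cfg["nics"])
        cmds.append(
            f"xe bond-create network-uuid=<{bond}-network-uuid> pif-uuids={pifs}"
        )
        # Each VLAN gets a network, then is attached to the bond's PIF.
        for name, tag in cfg["vlans"].items():
            cmds.append(f"xe network-create name-label={name}")
            cmds.append(
                f"xe vlan-create network-uuid=<{name}-network-uuid> "
                f"pif-uuid=<{bond}-pif-uuid> vlan={tag}"
            )
    return cmds


if __name__ == "__main__":
    for cmd in xe_commands(BONDS):
        print(cmd)
```

Printing the commands instead of running them keeps the sketch safe to try anywhere. On a real pool it is usually easier to let Xen Orchestra create the bonds and VLANs so they apply to every host at once.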

Network Overview

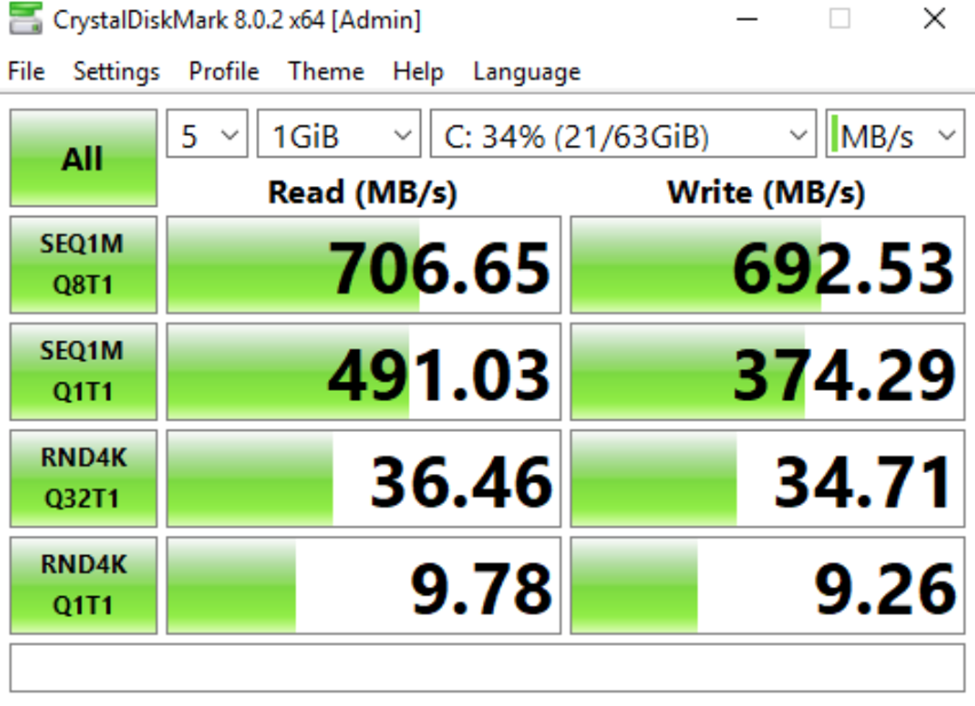

Once the network was all set up, I began exporting some test VMs from my vSphere cluster as OVA. I did have to use VMware's OVF Tool to make a file that XO could import. That ended up working out well. I ran some benchmarks to see how it would compare, and it was mostly in line with my ESXi speeds. I could probably eke a little more out of individual VMs, but I can get this same result running 2 at a time, so I'll chalk it up to the Xen drivers.

Being happy with that, it was time to pack up the rest of my VMs and move them over to free up my other 2 hosts. At the end, I had all 4 hypervisors set up with XCP-NG and joined to my pool.

All told, I've got 128 threads, 256GB of memory, and 80Gb of 10Gb network throughput across the 4 hosts.
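For the curious, here is a quick sketch of how those totals add up. It assumes each host has both sockets populated (the E5-2640 v2 is an 8-core, 16-thread part) and counts only the two 10Gb SFP+ ports per host, not the 1GbE management links. The constant and function names are just for illustration.

```python
HOSTS = 4
SOCKETS_PER_HOST = 2     # assumption: dual-socket nodes, both populated
THREADS_PER_CPU = 16     # Xeon E5-2640 v2: 8 cores with Hyper-Threading
RAM_GB_PER_HOST = 64
SFP_PORTS_PER_HOST = 2   # the dual 10Gb SFP+ links
GBIT_PER_PORT = 10


def pool_totals():
    """Add up threads, memory (GB) and 10Gb bandwidth (Gb/s) for the pool."""
    return {
        "threads": HOSTS * SOCKETS_PER_HOST * THREADS_PER_CPU,
        "ram_gb": HOSTS * RAM_GB_PER_HOST,
        "network_gbit": HOSTS * SFP_PORTS_PER_HOST * GBIT_PER_PORT,
    }


if __name__ == "__main__":
    print(pool_totals())
```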

Next step is backups... but that's to be continued.